Dr. Rafael Grossman’s Vision for Extended Reality and AI in Surgery

Dr. Rafael Grossman, a pioneering surgeon and technology enthusiast, took the stage at a recent healthcare innovation conference to share his perspective on the intersection of surgery, immersive technologies, and artificial intelligence. Known as the first surgeon to use Google Glass during live surgery, Grossman has built a career at the crossroads of clinical practice and frontier technologies. His talk was not merely an overview of new gadgets; it was a compelling narrative about reclaiming the human touch in medicine through the thoughtful use of advanced tools.

From Science Fiction to Surgical Reality

Grossman began with a short video clip that, while playful and futuristic, carried a deeper message: the future is here. The visuals depicted extended reality (XR) not as a far-off dream but as a working part of today’s operating rooms. As he explained, “This is something that is doable right now… bringing science, technology, and medicine to the human touch.”

A full-time trauma and general surgeon based in New England, Grossman emphasized that his interest in XR stems not from academic curiosity, but from lived experience in high-stakes clinical environments. “These are renders of my trauma bay and operating room. That’s where I live,” he said. It’s within this intense environment that he explores how tools like AR, VR, and AI can elevate surgical practice, education, and patient care.

Breaking the Geography Barrier in Medical Training

One of the key stories Grossman shared was his groundbreaking use of Google Glass—originally designed for consumer use—in an operating room to teach students. It wasn’t for publicity or novelty, he emphasized. It was simply a more effective way to teach. “The way we learn surgery is by looking. Seeing is everything,” he said. That moment opened the door to a larger question: how can immersive technology break barriers, not just in education, but also in real-time care delivery?

Historically, surgical education has followed the “See One, Do One, Teach One” model. With XR and spatial computing, that model can be dramatically enhanced. Grossman showcased how spatial computing, in which the world around you becomes an interactive desktop, can empower not just the learner but also the practitioner, regardless of geography.

From MXR 1.0 to MXR 2.0: The Role of AI

Grossman coined the term MXR 2.0 to describe the evolution of medical extended reality powered by artificial intelligence. MXR 1.0 brought immersive experiences to medical education and procedural rehearsal. MXR 2.0 introduces a game-changing trio: haptics, multisensory integration, and generative AI.

With haptic gloves, for instance, students can feel tissues and simulate the tactile complexity of surgery in a virtual space. Grossman highlighted companies like Fundamental Surgery and Medivis, which integrate haptics into surgical training platforms. “Haptics with VR is better than VR alone,” he noted, citing validation studies that support this claim.

Shrinking the Hardware, Expanding the Vision

Throughout the talk, Grossman was animated by how rapidly the hardware is evolving. From the bulky prototypes of a decade ago to sleek devices like Apple’s Vision Pro and Meta’s upcoming Orion 2 smart glasses, XR devices are becoming smaller, more powerful, and increasingly invisible. “You wouldn’t know these glasses are supercomputers empowered by AI,” he said.

These form factors matter because they’re moving XR from the lab into everyday clinical use. Imagine a surgeon wearing glasses that discreetly display step-by-step procedural guidance, patient vitals, and imaging overlays—all without taking their eyes off the patient.

AI-Driven Spatial Healthcare

A powerful thread in Grossman’s narrative was the emergence of what he calls spatial healthcare—the idea that clinicians can access data, diagnostics, and therapeutic tools not on a 2D screen, but in a 3D space. “When your space becomes your desktop, the potential is limitless,” he said. This can revolutionize diagnosis, education, and even treatment.

For instance, in diagnostic imaging, XR allows clinicians to move beyond the three traditional viewing planes and immerse themselves in a patient’s anatomy. “It’s like a fantastic voyage,” he said, describing how one can navigate complex structures in real time, manipulate them, and view them from any perspective. This spatial fluidity can lead to earlier detection, better planning, and ultimately more effective treatment.

Process Optimization: Beyond the Hype

Grossman stressed that while much attention has gone to the flashier aspects of XR, process optimization—mundane but critical—remains an underexplored opportunity. He showed the contrast between old-school paper charts and today’s “computers on wheels” that still clutter hospitals and separate clinicians from patients.

“What if instead of rolling a cart, your data floated in front of you?” he asked. He described his capstone project at Singularity University: a vision in which XR places the patient’s EMR, imaging, and vitals directly in the clinician’s field of view, freeing up time, hands, and mental energy for human connection.

Real-Time Surgical Empowerment

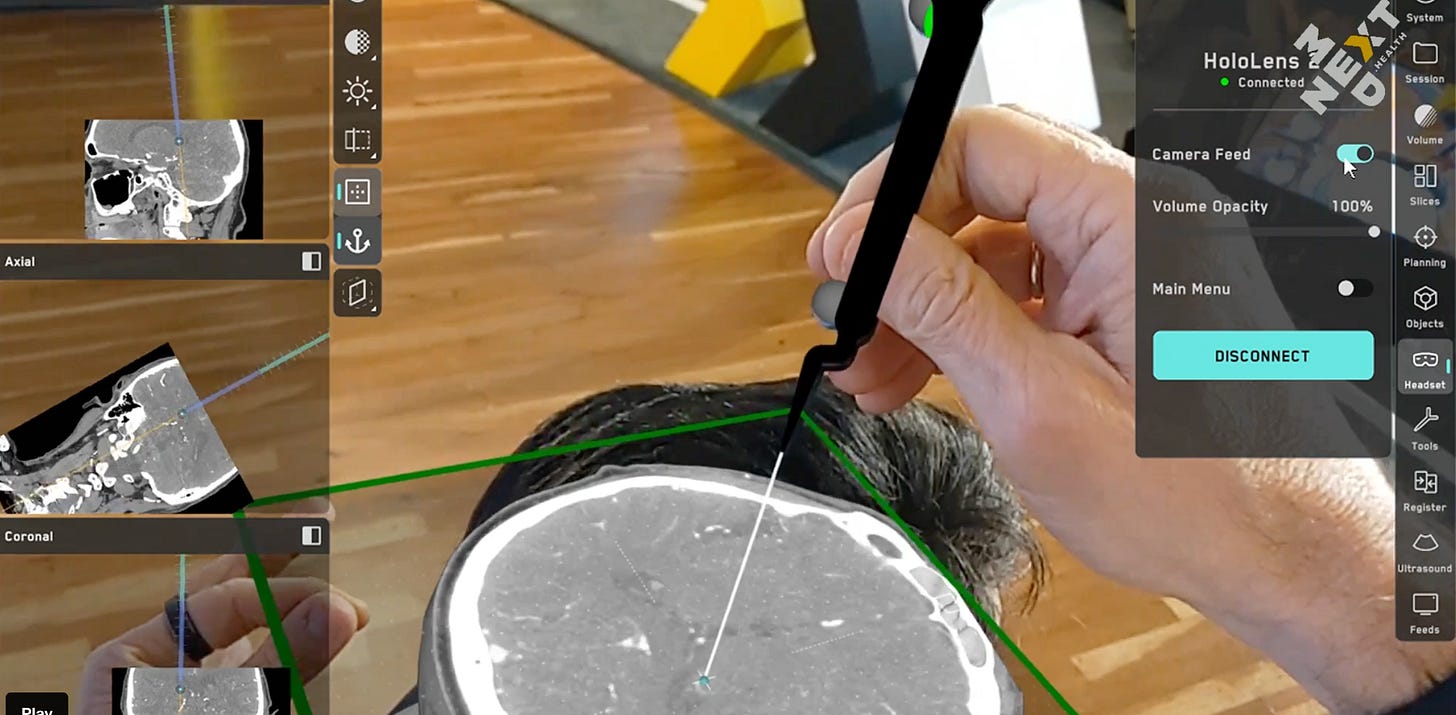

Perhaps the most jaw-dropping segment of Grossman’s talk centered on how XR and AI are already being used in surgery. Companies like Medivis are registering real patient data and combining it with virtual models in XR headsets. This enables “millimetric precision” in procedures like neurosurgery and spine surgery. Surgeons can virtually “see through” tissue layers, plan incisions, and perform biopsies, all while wearing a headset.

Screens, he emphasized, can now exist wherever you want them: in mid-air, adjacent to your tools, or overlaid onto anatomy. And all of it is customizable in real time. “This isn’t just planning the surgery before the OR. This is doing the surgery with enhanced visual and spatial awareness.”

The Path to Autonomous Surgery

Grossman ended with one of the most provocative ideas of the talk: autonomous surgery. He acknowledged the skepticism but confidently predicted that in less than a decade, we will see AI-assisted or even autonomous surgical systems operating under human supervision.

Using NVIDIA’s Isaac platform as an example, he described how digital twin environments can be used to simulate anatomy, train AI systems through imitation learning, and eventually deploy them in the physical world. “I wasn’t a believer,” he admitted. “But now, I’m absolutely sure that it’s coming.”

AI and Empathy: Can Machines Be Kind?

As the conversation turns increasingly to AI’s capabilities, one question persists: Can AI show compassion? Grossman believes it can. Surveys suggest that patients already perceive AI-generated responses as being as empathetic as, or even more empathetic than, those of rushed human clinicians. With training and design, AI systems can emulate bedside manner, offer comfort, and enhance, not replace, the human experience.

The Augmented Human Clinician

Dr. Rafael Grossman’s talk was not a love letter to machines but a call to humanism through technology. “We must use tech to become more human, more humane,” he said. In his view, the future of healthcare doesn’t belong to robots, gadgets, or code alone. It belongs to augmented humans—clinicians who are empowered, not distracted, by the tools at their disposal.

In a world where clinicians are overwhelmed by data, burnout, and systemic inefficiencies, Grossman’s message is a breath of fresh air: smart tools used wisely can bring the focus back to what really matters—compassionate care, precise action, and connected healing. With XR and AI at our side, we may just reclaim the soul of medicine.